In my last post on running Ollama in my home, I detailed how, for only $100, I repurposed an Intel NUC as a mini-server to host Ollama, Docker services, Fedora Server, Pi-hole, and more (kevinquillen.com).

With that server setup, I’ve successfully run models like:

- Gemma 4b & Gemma 8b for general-purpose conversational and ideation tasks

- Codex models optimized for code generation and IDE-driven workflows

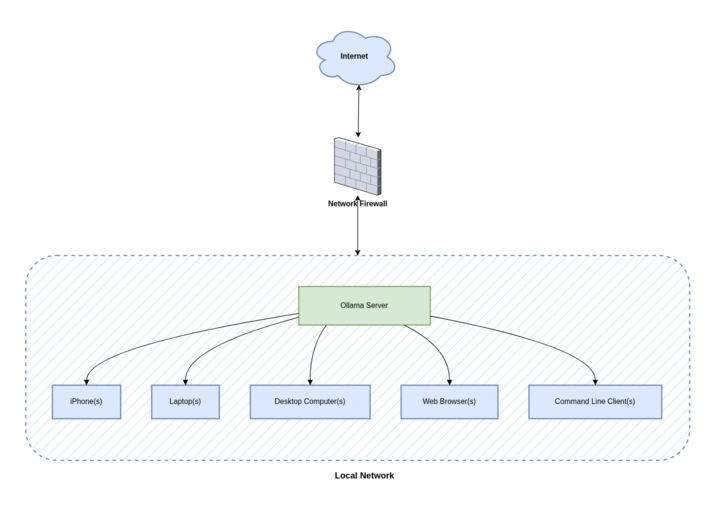

Being set up on my home network means that any PC, IDE, browser, phone, or CLI terminal in the house can access Ollama and use AI. There is no cloud dependency and no internet round trip; it serves your prompts and questions like ChatGPT. Granted, I made a very small investment in the hardware, so responses are not instant like ChatGPT online - but I am fairly confident that if I stepped up to a beefier machine with a dedicated Nvidia GPU and 32-96 GB of RAM, I would see faster performance. I knew this going into it, but nonetheless I have been having a lot of fun with my own private AI in my home.
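To give a rough idea of what "any device can access Ollama" looks like in practice, here is a minimal Python sketch that sends a prompt to Ollama's `/api/generate` HTTP endpoint from another machine on the network. The hostname `nuc.local` and the model tag `gemma3:4b` are placeholders I made up for illustration, not my actual values, and the sketch assumes the server has been configured to listen on the LAN (Ollama binds to localhost by default) on its default port, 11434.

```python
import json
import urllib.request

# Assumptions: "nuc.local" is a placeholder hostname and "gemma3:4b" is a
# placeholder model tag. 11434 is Ollama's default port.
OLLAMA_URL = "http://nuc.local:11434"


def build_generate_request(prompt, model="gemma3:4b", base_url=OLLAMA_URL):
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the prompt to the server and return the model's reply text."""
    request = build_generate_request(prompt, **kwargs)
    # Generous timeout, since a small CPU-only box can take a while to answer.
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.loads(response.read())["response"]


# Example call (needs a reachable Ollama server):
# print(ask("Give me three ideas for a weeknight dinner."))
```

Setting `"stream": False` asks for the whole answer in a single JSON object, which keeps the sketch short; the apps and plugins mentioned in this post typically stream tokens as they arrive instead.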

This is the network setup we have:

We use an iOS app called 'Reins' to connect to Ollama, which has a similar UI to ChatGPT. In our browsers, we added a plugin called Page Assist to connect to Ollama. For IDEs, I only use JetBrains-based IDEs, but there are a handful of plugins that work with Ollama, including the official JetBrains AI Assistant that ships with the IDEs. Additionally, I use Termius to SSH to the server from my phone or iPad if I need to administer it or add a new model to Ollama.

I thought this was exciting, to be honest. I can imagine that several companies are racing to provide in-home devices like this for the same purpose - an "Apple TV"-like set-top box that manages all of this automatically. In five years' time (maybe less), we will start seeing these on the market and in people's homes.

I started thinking of other use cases for AI. I usually need a lot of help with home improvement, pool management, and general needs (lawn care, etc.). I also found it works well as a sous chef to bounce ideas off of in the kitchen.

For example, I can take a picture of an area of my lawn or the exterior of my home, describe the issue I think I see, and ask Ollama (with a vision-enabled model) to assess it and suggest how I can best approach the problem. If I am experiencing an issue with the pool pump system, I can give it the brand name and model of the control unit and get detailed assistance on what I should do. In each case, it gave a very good walk-through of the issue(s).
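For the curious, the photo-plus-question workflow above can also be scripted. This is only a sketch of one way to do it, not how the apps I use work internally: Ollama's `/api/generate` endpoint accepts an `images` list of base64-encoded images when the model supports vision. The hostname `nuc.local`, the model tag `gemma3:4b`, and the file name in the example call are all placeholders chosen for illustration.

```python
import base64
import json
import urllib.request


def build_vision_payload(image_bytes, question, model="gemma3:4b"):
    """Return the JSON body for /api/generate with one attached image.

    The default model tag is a placeholder; use any vision-capable model
    that is installed on the server.
    """
    return {
        "model": model,
        "prompt": question,
        # Images are sent as base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }


def ask_about_photo(path, question, base_url="http://nuc.local:11434", **kwargs):
    """Send a photo and a question to the server and return the reply text."""
    with open(path, "rb") as photo:
        payload = build_vision_payload(photo.read(), question, **kwargs)
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Image prompts can be slow on modest hardware, hence the long timeout.
    with urllib.request.urlopen(request, timeout=600) as response:
        return json.loads(response.read())["response"]


# Example call (hypothetical file name, needs a reachable Ollama server):
# print(ask_about_photo("lawn.jpg", "What might be causing these brown patches?"))
```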

Last weekend I gave it images of a 7.1 sound system, along with a subwoofer, that the previous owner left behind. It was able to identify the make, model, and year of the subwoofer, how they wired it, how to identify which speaker is which (the 9-volt battery test), and whether the stereo receivers I was shopping for would be compatible with that setup. I very much benefitted from this level of assistance, as services and contractors charge top dollar to tell you the same information. It helps me understand and take action, saving money in most of these cases.

These are practical, approachable tasks that any homeowner (or prospective buyer) can benefit from, all supported by a home-deployed model. I am considering integrating it with Home Assistant at some point for more interesting use cases. I look forward to upgrading this system down the road for better performance, but so far I have found a lot of joy in this project for very little investment.